
Monday Jul 12, 2021

The brain processes auditory and visual information differently, especially when it comes to space. In vision, the retina senses the locations of images with respect to where the eyes are pointing. In hearing, the cues our brains use to localize sound tell us where the sound is positioned with respect to the head and ears. How then do we perceive space as unified? In particular, how do our brains compensate for eye movements that constantly shift the relationship between the visual and auditory scenes?

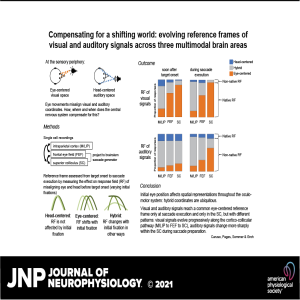

In this podcast, Editor in Chief Nino Ramirez and author Jennifer Groh discuss the manuscript titled “Compensating for a shifting world: evolving reference frames of visual and auditory signals across three multimodal brain areas” by Caruso et al. Models of visual-auditory integration posit that visual signals are eye-centered throughout the brain, while auditory signals are converted from head-centered to eye-centered coordinates. The authors show instead that both modalities largely employ hybrid reference frames, neither fully head-centered nor fully eye-centered. Across three hubs of the oculomotor network (intraparietal cortex, frontal eye field, and superior colliculus), visual and auditory signals evolve from hybrid to a common eye-centered format, with dynamics that differ across brain areas and over time.

Valeria C. Caruso, Daniel S. Pages, Marc A. Sommer, and Jennifer M. Groh

#neuroscience @jmgrohneuro

Check out the article here: https://doi.org/10.1152/jn.00385.2020

Join APS today: https://www.physiology.org/community/aps-membership
